CBA ItemBuilder Workshop: Session 16

Ulf Kroehne

Paris, 2022/11/07 - 2022/11/08

Session 16: Log-Data

Overview Session 16

Log-Data

- Terminology

- Tracing Debug Window

Contextualized Log Events

- Timed Actions

- States

Process Indicators

- Examples

- Validation

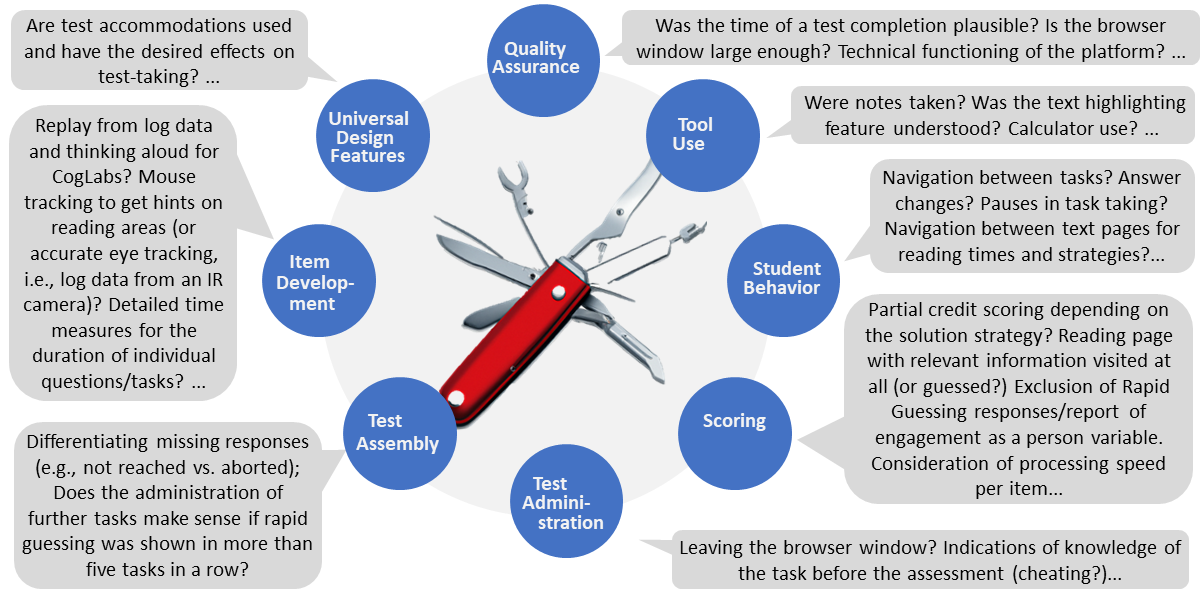

Potential Use of Log-Events

Log data are typically analyzed after the assessment, but they can also be used during the assessment if this use is already planned during item development.

Introduction

Starting Point

Raw Log Events: Basic information logged by a specific assessment software

- Clicking

- Typing

- Scrolling

- …

Promise

- Log data might provide insights into test-taking processes and underlying cognitive, emotional and meta-cognitive processes.

The terms log data and log file data are not originally specific to assessments. Logging of information (e.g., server logs, system event logs, etc.) is used by software developers in all kinds of software.

Single events can inform about WHAT happened (e.g., a page was changed), WHEN (e.g., at time \(t\)) and WHY (e.g., because of a timer).
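A single raw log event can be pictured as a small record that answers these three questions. The Python sketch below is illustrative only: the class name `RawLogEvent` and its fields (`event_type`, `timestamp`, `trigger`, `details`) are hypothetical and do not describe the format in which the CBA ItemBuilder actually stores its log events.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical record layout for illustration; NOT the CBA ItemBuilder export format.
@dataclass(frozen=True)
class RawLogEvent:
    event_type: str                 # WHAT happened, e.g. a page switch
    timestamp: float                # WHEN it happened (assumed: seconds since task start)
    trigger: str                    # WHY it happened, e.g. "user" or "timer" (assumed field)
    details: Optional[dict] = None  # additional payload, e.g. the target page


event = RawLogEvent("PageSwitchTopLevel", 12.5, "timer", {"page": "p2"})
print(event.event_type, event.timestamp, event.trigger)
```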

Raw Log Events are platform-specific (i.e., what is logged when using, for instance, the CBA ItemBuilder is defined by the programmers of the CBA ItemBuilder).

Terminology

Application

Contextualized Log-Events: Actions that indicate specific behavior within Tasks

- Taking notes

- Using the calculator

- Requesting additional information

- …

Empirical log-data analyses, for instance of large-scale assessment data, frequently use contextualized log events:

- Occurrence of specific behavior (i.e., frequency of events), for instance, visiting a particular task-relevant page

- Time measures (e.g., time differences between events), like Time on Task and its relationship to success

- Sequence of (timed) actions (e.g., reading before answering, successful strategies etc.)

Contextualized Log-Events are task-specific (i.e., a log event for a specific behavior, such as using the calculator, is only relevant in very specific tasks in which a digital calculator is provided).
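The three kinds of information listed above (occurrence, time measures, sequence) can be sketched for one test-taker on one task. This minimal Python example assumes that contextualized events are already available as `(timestamp, name)` pairs; the event names (`TaskStart`, `VisitInfoPage`, `Answer`, `TaskEnd`) and the helper functions are hypothetical.

```python
# Contextualized events for one test-taker on one task (hypothetical names, seconds).
events = [
    (0.0, "TaskStart"),
    (4.2, "VisitInfoPage"),
    (15.8, "VisitInfoPage"),
    (31.0, "Answer"),
    (40.5, "TaskEnd"),
]


def frequency(events, name):
    """Occurrence: how often a specific contextualized event was observed."""
    return sum(1 for _, n in events if n == name)


def time_on_task(events, start="TaskStart", end="TaskEnd"):
    """Time measure: time between the first start event and the last end event."""
    t_start = min(t for t, n in events if n == start)
    t_end = max(t for t, n in events if n == end)
    return t_end - t_start


def occurs_before(events, first, second):
    """Sequence: True if `first` occurs before the first occurrence of `second`."""
    t_first = [t for t, n in events if n == first]
    t_second = [t for t, n in events if n == second]
    return bool(t_first) and bool(t_second) and min(t_first) < min(t_second)


print(frequency(events, "VisitInfoPage"))                # 2
print(time_on_task(events))                              # 40.5
print(occurs_before(events, "VisitInfoPage", "Answer"))  # True
```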

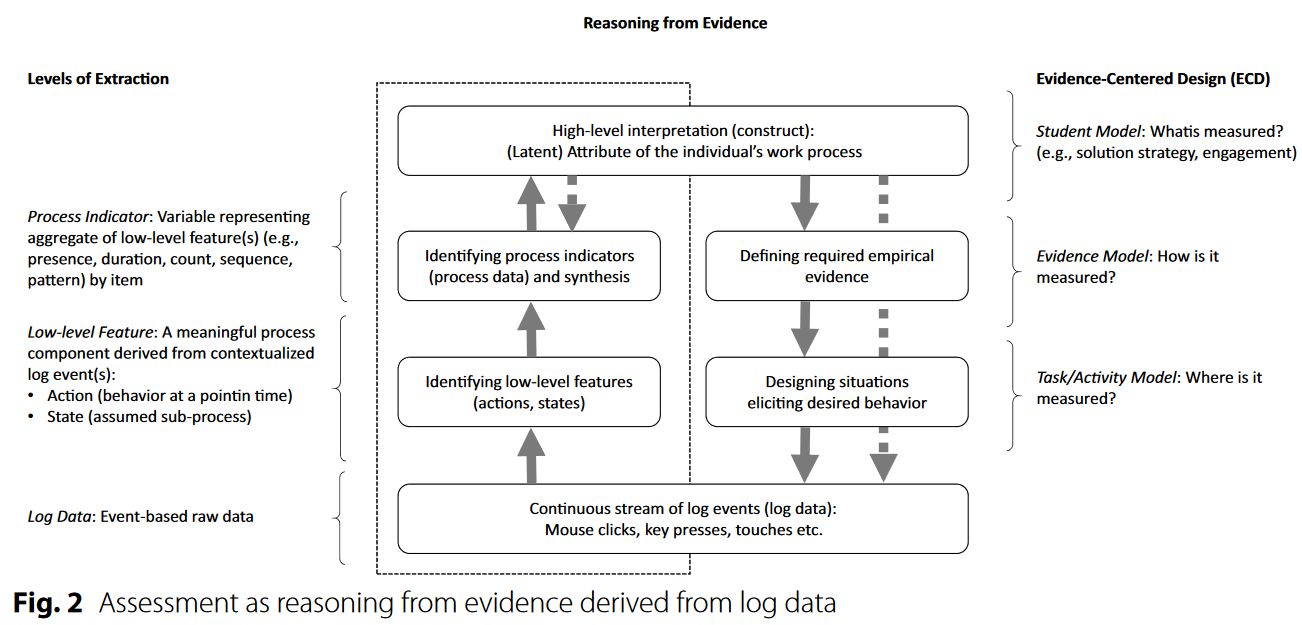

Conceptual Challenge

Platform-specific Raw Log Events need to be translated into task-specific Contextualized Log-Events.
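One way to picture this translation is a task-specific rule table that maps raw events (an event type plus a detail such as a component id or page name) to behaviors. In this Python sketch, the raw-event layout, the component id `btnCalculator` and the page name `info` are invented for illustration; only the event type names `Button` and `PageSwitchTopLevel` echo the raw log events mentioned in this session.

```python
# Hypothetical raw events: (timestamp, event_type, details)
raw_events = [
    (2.0, "PageSwitchTopLevel", {"page": "stimulus"}),
    (9.5, "Button", {"id": "btnCalculator"}),
    (12.1, "Button", {"id": "btnNext"}),
    (14.0, "PageSwitchTopLevel", {"page": "info"}),
]

# Task-specific rules: (event_type, detail_key, detail_value) -> contextualized event.
# Component ids and page names are invented for this example.
RULES = {
    ("Button", "id", "btnCalculator"): "UsingTheCalculator",
    ("PageSwitchTopLevel", "page", "info"): "RequestingAdditionalInformation",
}


def contextualize(raw_events, rules):
    """Translate platform-specific raw events into task-specific contextualized events."""
    result = []
    for t, event_type, details in raw_events:
        for (rule_type, key, value), label in rules.items():
            if event_type == rule_type and details.get(key) == value:
                result.append((t, label))
    return result


print(contextualize(raw_events, RULES))
# [(9.5, 'UsingTheCalculator'), (14.0, 'RequestingAdditionalInformation')]
```

Keeping the rules as data rather than code means that a different implementation of the same task (e.g., as a PCI component) would need its own rule table, while the contextualized labels, and the interpretation attached to them, could stay the same.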

Two Problems

The same task can be implemented using different software (e.g., the CBA ItemBuilder and a custom implementation as a PCI component):

- How to make sure that comparable events are used when interpreting differences in the test-taking processes?

Task-specific arguments must be integrated to find (research) and interpret (diagnostic use / application) differences in test-taking processes:

- How to make sure that domain knowledge (i.e., references to the assessment frameworks) is acknowledged when interpreting differences in the test-taking processes?

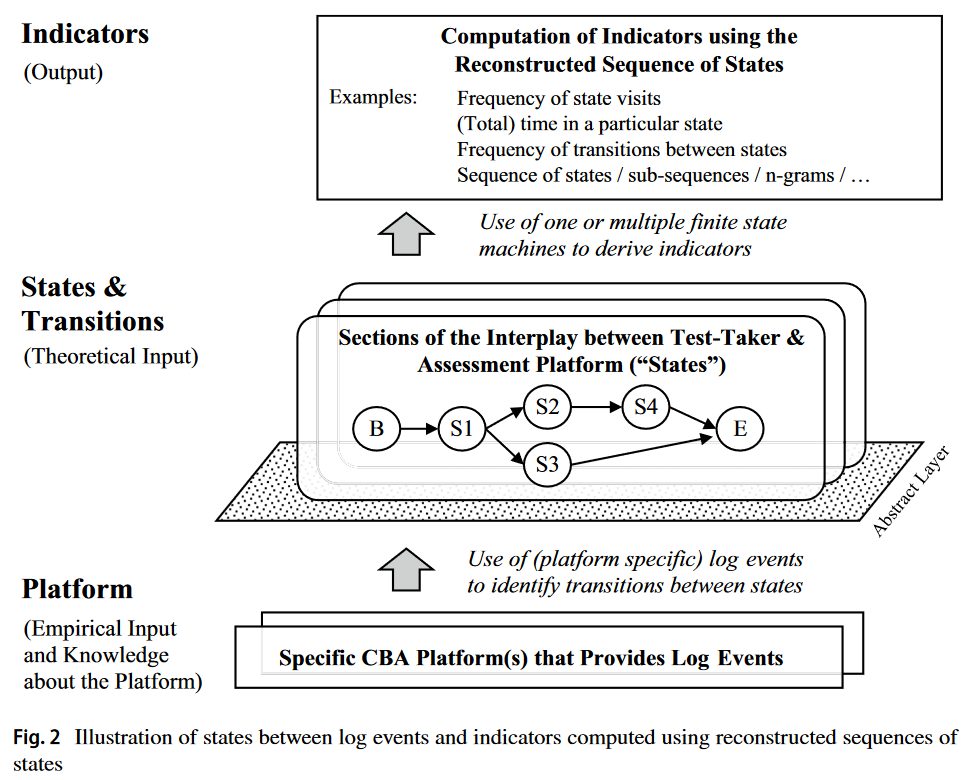

Feature Extraction

Low-Level Features

- are identified with the help of technical, platform-specific raw log events

- are defined with reference to the assessment framework

- are either Actions (with a timestamp) or States (with a duration, i.e., two timestamps)

Contextualized Log Events are Actions, i.e., events within states with a specific interpretation.
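The distinction between Actions and States, and the idea that actions happen within states, can be expressed with two small data types. This Python sketch assumes that state transitions are already known as `(timestamp, state_name)` pairs; the class and function names and the phases `reading` and `answering` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class State:
    name: str
    start: float  # timestamp of the event that starts the state
    end: float    # timestamp of the event that ends the state


@dataclass
class Action:
    name: str
    timestamp: float  # an action has a single timestamp


def states_from_transitions(transitions, end_time):
    """Build consecutive states from (timestamp, state_name) transition events.

    Each state lasts until the next transition; the last one ends at end_time.
    """
    states = []
    for i, (t, name) in enumerate(transitions):
        t_next = transitions[i + 1][0] if i + 1 < len(transitions) else end_time
        states.append(State(name, t, t_next))
    return states


def state_of(action, states) -> Optional[str]:
    """Return the state in which an action occurred (interval [start, end))."""
    for s in states:
        if s.start <= action.timestamp < s.end:
            return s.name
    return None


# Hypothetical phases of one task
states = states_from_transitions([(0.0, "reading"), (20.0, "answering")], end_time=45.0)
calc = Action("UsingTheCalculator", 27.3)
print([(s.name, s.end - s.start) for s in states])  # [('reading', 20.0), ('answering', 25.0)]
print(state_of(calc, states))                        # answering
```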

Process Indicators

Process indicators (variables representing aggregates at the person or person x item level) are the theoretically and empirically meaningful information derived from log data.

- Are created from low-level features (i.e. actions and states)

- Based on the technical log events (raw log events)

- Theoretical foundation (e.g., via Evidence-Centered Design, ECD)
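As a sketch of the aggregation step, the following Python function turns low-level features into two person x item indicators: the number of calculator uses (from Actions) and the total time spent in a reading state (from States). The tuple layouts, feature names and indicator names are assumptions made for illustration, not a prescribed format.

```python
from collections import defaultdict

# Hypothetical low-level features of two test-takers on item "i1"
actions = [  # (person_id, item_id, action_name, timestamp)
    ("p1", "i1", "UsingTheCalculator", 27.3),
    ("p1", "i1", "UsingTheCalculator", 31.0),
    ("p2", "i1", "RequestingAdditionalInformation", 5.0),
]
states = [  # (person_id, item_id, state_name, start, end)
    ("p1", "i1", "reading", 0.0, 20.0),
    ("p2", "i1", "reading", 0.0, 12.5),
    ("p2", "i1", "reading", 30.0, 34.5),
]


def person_item_indicators(actions, states):
    """Aggregate low-level features into indicators per (person, item) pair.

    Only pairs with at least one calculator action or reading state appear.
    """
    indicators = defaultdict(lambda: {"n_calculator": 0, "reading_time": 0.0})
    for person, item, name, _timestamp in actions:
        if name == "UsingTheCalculator":
            indicators[(person, item)]["n_calculator"] += 1
    for person, item, name, start, end in states:
        if name == "reading":
            indicators[(person, item)]["reading_time"] += end - start
    return dict(indicators)


for key, values in person_item_indicators(actions, states).items():
    print(key, values)
# ('p1', 'i1') {'n_calculator': 2, 'reading_time': 20.0}
# ('p2', 'i1') {'n_calculator': 0, 'reading_time': 17.0}
```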

Validation of Process Indicators

Comparability (and reproducibility in the sense of Open Science) of process indicators is a prerequisite for building empirical evidence (i.e., validation).

Content knowledge (i.e., expertise) is required to describe meaningful Low-Level Features (and to link them with any raw log events).

Problem 1 (Comparability):

- Process Indicators are comparable if they are created from low-level features with identical interpretations (i.e., Validity Arguments).

Problem 2 (Domain Knowledge):

- Assessment Frameworks are used to formulate Validity Arguments for low-level features.

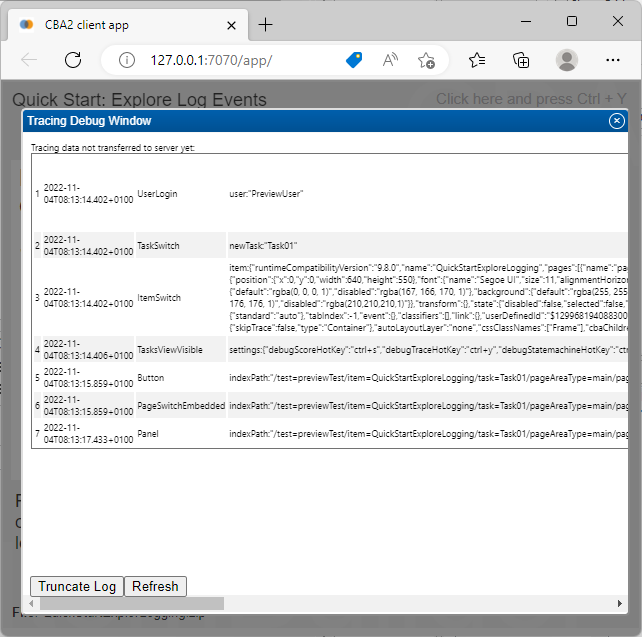

Raw Log Events

The naming and semantics of raw log events are platform- and software-specific (i.e., arbitrarily defined to some extent by the programmers of the CBA ItemBuilder).

The CBA ItemBuilder automatically collects raw log events:

Test-Level

- UserLogin (or something related to authentication)

- TaskSwitch / ItemSwitch / TaskViewVisible (events notifying that the task was loaded and rendered)

Task-Level

- PageSwitchTopLevel / PageSwitchEmbedded / … (events notifying about page changes)

- Panel / ImageField / Button / … (events notifying about user interactions with any of the components)

…

- Many (see tables…)

Tracing Debug Window

For learning about Log-Events, the Preview provides the Tracing Debug Window that lists all recently collected Log Events of the currently running item.

During Preview

Recent events can be inspected at runtime in the Preview.

Ctrl + Y (Strg + Y on German keyboards) is the default hotkey for the Tracing Debug Window.

The hotkey can be configured in the preferences of the CBA Preview (main menu: Utilities > Open preferences).

Find a short introduction here.

Completeness of Log-Event Data (1)

Response completeness

- all final answers are logged

Result data (i.e., the raw results in terms of final answers and the entered text) can be derived from log data. This criterion means that the result dataset can be reconstructed from the log data (and the items). Note that additional steps might be necessary to apply scoring rules to obtain a dataset with scored responses.

- The separation in log data versus result data becomes obsolete (and log data are sufficient).
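Under response completeness, deriving the result data amounts to keeping the last logged answer per item. This Python sketch assumes a hypothetical list of answer events `(timestamp, item_id, response)`; the function name `final_responses` is illustrative, and scoring rules would still have to be applied afterwards.

```python
# Hypothetical answer events: (timestamp, item_id, response)
answer_events = [
    (10.0, "i1", "B"),
    (25.0, "i2", "42"),
    (31.0, "i1", "C"),  # the test-taker changed the answer to item i1
]


def final_responses(answer_events):
    """Reconstruct the result data (final raw answers) from logged answer events."""
    result = {}
    for _t, item, response in sorted(answer_events):  # chronological order
        result[item] = response  # later events overwrite earlier ones
    return result


print(final_responses(answer_events))  # {'i1': 'C', 'i2': '42'}
```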

Progress completeness

- all answer-changes are logged

Result data can be derived from the log data as described for response completeness, but at any possible point in time. If this requirement is fulfilled, multiple result datasets can be created, each containing the final responses at the moment the assessment was hypothetically interrupted. This criterion requires that all events informing about an answer change are available.

- This criterion implies complete response times.
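Progress completeness can be illustrated by computing the responses as they would have been stored had the assessment been interrupted at time \(t\). The event layout `(timestamp, item_id, response)` and the function name `responses_at` are assumptions for this Python sketch; each call yields one of the hypothetical result datasets described above.

```python
# Hypothetical answer-change events: (timestamp, item_id, response)
answer_events = [
    (10.0, "i1", "B"),
    (25.0, "i2", "42"),
    (31.0, "i1", "C"),
]


def responses_at(answer_events, t):
    """Responses as they would have been stored if the assessment ended at time t."""
    snapshot = {}
    for ts, item, response in sorted(answer_events):
        if ts > t:
            break  # events after the hypothetical interruption are ignored
        snapshot[item] = response
    return snapshot


# One result dataset per hypothetical interruption time
for t in (5.0, 20.0, 40.0):
    print(t, responses_at(answer_events, t))
# 5.0 {}
# 20.0 {'i1': 'B'}
# 40.0 {'i1': 'C', 'i2': '42'}
```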

Completeness of Log-Event Data (2)

Replay completeness

- a screen cast can be created

Visual replay (i.e., a screen-cast that shows what was visible on screen and when) can be reconstructed from the collected log events. This requires that everything that was visible on screen and everything that changed the visibility of content is logged.

- The CBA ItemBuilder aims to collect complete log data regarding this criterion.

State completeness

- a-priori defined actions and states can be identified

With respect to a concrete set of actions and states all log data are available to identify the transitions between states (or the occurrence of actions). If this condition is useful to describe the completeness of the log data gathered in the particular assessment, please list the relevant actions and states that can be identified using the log data.

- This criterion is most relevant for item authors when designing items.
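For item authors, state completeness can be checked by writing down, for each a-priori defined state, the events that start and end it, and comparing them with the events an item actually produces (for instance, as observed in the Tracing Debug Window during Preview). In this Python sketch, the `type:detail` event keys, the state definitions and the function name `missing_events` are hypothetical.

```python
# A-priori definition (hypothetical): each state needs a start event and an end event.
STATE_DEFINITIONS = {
    "reading": ("PageSwitchTopLevel:stimulus", "PageSwitchTopLevel:question"),
    "answering": ("PageSwitchTopLevel:question", "TaskSwitch"),
}


def missing_events(logged_event_types, definitions):
    """List, per state, the defining events that do not appear in the log."""
    logged = set(logged_event_types)
    return {
        state: [e for e in (start, end) if e not in logged]
        for state, (start, end) in definitions.items()
        if start not in logged or end not in logged
    }


log = ["PageSwitchTopLevel:stimulus", "PageSwitchTopLevel:question"]
print(missing_events(log, STATE_DEFINITIONS))  # {'answering': ['TaskSwitch']}
```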

Re-Play of Items using Log Data

Illustration of Replay Completeness

Goals

- Give insights into the test-taking process by replay

- Implement automatic tests (using real test-taking behavior)

- Prove the completeness of log data

The embedded video on this page shows an experimental preview of the replay feature, which is under development.

Example for Low-Level Features

Response times for questionnaires with multiple questions per screen (i.e., Item-Batteries) are not naturally defined, but can be constructed from log events.
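One possible construction (an assumption here, not necessarily the one used in any specific study) attributes to each question the time elapsed since the previous answer on the same screen, or since the screen became visible for the first answer. The Python sketch below assumes answer events as `(timestamp, question_id)` pairs; if a question is answered more than once, its intervals are summed.

```python
def battery_response_times(screen_start, answer_events):
    """Attribute to each question the time since the previous answer (or screen start).

    answer_events: hypothetical (timestamp, question_id) pairs for one screen.
    Repeated answers to the same question add further intervals to its total.
    """
    times = {}
    previous = screen_start
    for t, question in sorted(answer_events):
        times[question] = times.get(question, 0.0) + (t - previous)
        previous = t
    return times


answers = [(6.0, "q1"), (9.5, "q2"), (14.0, "q3"), (16.0, "q2")]
print(battery_response_times(0.0, answers))
# {'q1': 6.0, 'q2': 5.5, 'q3': 4.5}
```

Other constructions are possible, e.g. counting time only up to the first answer per question; the choice should be justified with respect to the intended interpretation of the response times.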

Summary for Item-Authors

Item Design

- Purposefully control the visibility of content

Whether a test-taker noticed a specific part of the stimulus or item can be made traceable if that content only becomes visible after specific (logged) user interactions.

- Allow and log construct-relevant strategies

Test-takers should be able to apply strategies and knowledge when solving the tasks, as required for authentic assessments (face validity). When possible, allow test-takers to choose between different ways to solve the task.

Test Design

- Avoid construct-irrelevant freedom

Example: A “free” navigation between tasks, i.e. leaving and re-entering tasks, complicates the interpretation of editing processes and is not necessarily required if test-wiseness is not to be measured.

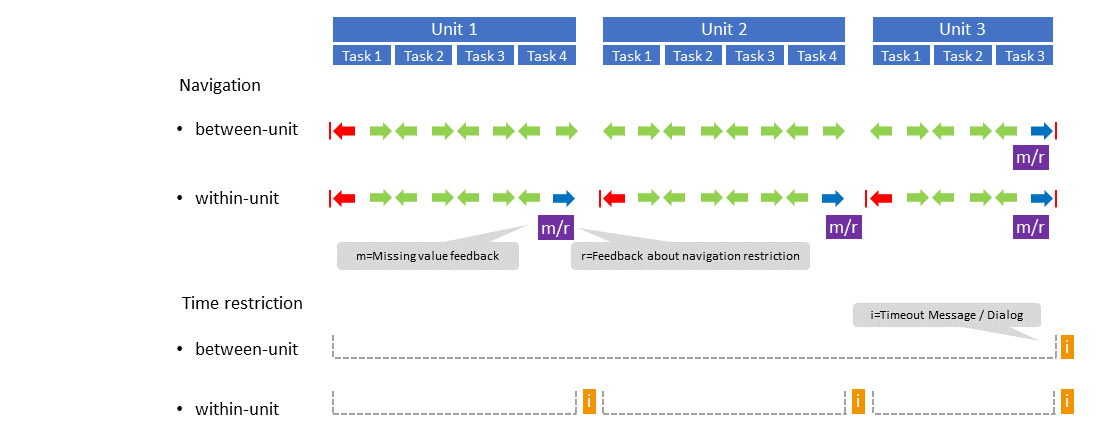

Suggestions for Time Limits and Feedback

- Time limits

Consider Unit-Level time limits.

- Feedback

Provide feedback about potential navigation restrictions and missing values.

End of Session 16: Log-Data

Helpful to remember

- Log-Data will be collected automatically.

Task for item authors

Think about possibilities to use them for diagnostic purposes while designing the items.

Low-level features with duration (States):

- What are meaningful phases (e.g., exploration, reading, answering, verification, etc.)?

- What triggers the start (and the end) of the particular part (i.e., which events can define the transition between States)?

Low-level features with a timestamp only (Actions / Contextualized Events):

- Which raw log events identify the particular Action?

- In which sections (i.e., States) of an assessment can particular Actions occur?

- What if something is not possible with the CBA ItemBuilder? Learn more about embedding HTML/JavaScript content in Session 17.